RealSense 3d camera on Linux and Mac OS X: infrared, calibration, point clouds

The soon-to-be-released Intel RealSense 3d camera now has two working open source drivers. Intel’s adherence to the USB Video Class standard meant that drivers didn’t need to be written from scratch, just tweaked to handle not-yet-standardized features, to get basic functionality. The camera also has a proprietary interface with unknown capabilities, but those aren’t critical for its use as a depth capture device.

Pixel Formats

For the first two months of working with the camera I was only able to get one video format, despite clearly enumerated video formats with different bits per pixel in the USB descriptors and a list of the same 7 formats via the Linux kernel driver. I read almost all of the USB Video Class (UVC) standard, and checked the USB logs for every value of every struct as I came to them in the documentation. I was mostly looking for anything weird when setting up the infrared video stream. It seemed that infrared was just another video format. Suspecting that something was amiss with setting the format using v4l2-ctl, I added code to my depthview program to enumerate and select a format. What I found was that although v4l2-ctl let me pick a pixel format by index, at the kernel level the only way to pick a format was via a fourcc code that the kernel already knew about. The only way to select the other formats was to patch the kernel.

Kernel Patch

Though I’ve read through Linux Device Drivers, 3rd edition, and have even had some patches accepted to the kernel before, they were just code cleanup. When I went to read the kernel source I was extremely lucky that the most recent patch to the uvcvideo driver was one that added a video pixel format.

All it took to get 6 formats was adding them and reloading the driver on the running system. With one of the updates I made a mistake that caused a kernel segfault, but that wasn’t enough to crash the system. It’s more resilient than I thought. I haven’t sent my patch upstream yet because I was trying to figure out some details of the depth formats first, specifically the scale of the values and whether they are linear or exponential. Patch is available here for now.

Format Details

You may have noticed that I said 7 formats at the beginning, but in the last paragraph I said 6. Indexes in UVC are 1 based, but the YUYV format listed first in the descriptor has an index of 2 instead of 1, so when drivers are asked for the YUYV format they return the second format instead. I haven’t tried hacking in a quirk to select index 1 to see if there really is an unselectable YUYV format. This is assumed to be a bug, but it may have been key on my first day of trying to get the camera working with Linux, because it made the second format the only one that the kernel recognized.

All of the fourcc codes that are officially part of the UVC standard are also the first 4 characters of the GUID in ASCII. I will refer to the formats by their 4 character ASCII names as derived from the GUIDs.
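The relationship between a format GUID and its fourcc can be sketched in a few lines of Python. This is only an illustration, not code from the kernel patch: the function names are mine, the YUY2 GUID is the standard one for that format, and the INVZ entry uses placeholder tail bytes because only its first four characters matter here. The packing in `v4l2_fourcc` follows the usual convention of putting the first character in the lowest byte.

```python
import uuid


def fourcc_from_guid(guid_bytes: bytes) -> str:
    """Return the first four bytes of a 16-byte format GUID as ASCII."""
    if len(guid_bytes) != 16:
        raise ValueError("a GUID is exactly 16 bytes")
    return guid_bytes[:4].decode("ascii")


def v4l2_fourcc(code: str) -> int:
    """Pack four ASCII characters into a 32-bit integer, first char lowest."""
    if len(code) != 4:
        raise ValueError("a fourcc is exactly 4 characters")
    a, b, c, d = (ord(ch) for ch in code)
    return a | (b << 8) | (c << 16) | (d << 24)


# Standard YUY2 GUID, converted to the byte order used in USB descriptors.
YUY2_GUID = uuid.UUID("32595559-0000-0010-8000-00aa00389b71").bytes_le

# Hypothetical stand-in: real tail bytes replaced with zeros on purpose.
PLACEHOLDER_INVZ_GUID = b"INVZ" + bytes(12)

for guid in (YUY2_GUID, PLACEHOLDER_INVZ_GUID):
    name = fourcc_from_guid(guid)
    print(name, hex(v4l2_fourcc(name)))
```

The integer form is what a driver compares against when an application asks for a format, which is why a format the kernel has no fourcc for cannot be selected by name.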

Index 2 INVZ

INVZ is the default format because of the indexing bug and so it’s the format described in the first 2 blog posts.

Index 3 INZI is a 24 bit format.

The first 16 bits are depth data, and the last 8 are infrared.
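A minimal sketch of unpacking such a frame, assuming the pixels are tightly packed at 3 bytes each and that the 16 bit depth value is stored low byte first. The byte order is my assumption and `split_inzi` is an illustrative name, not part of any driver.

```python
def split_inzi(frame: bytes):
    """Split packed 24-bit pixels into (depth, infrared) lists."""
    if len(frame) % 3:
        raise ValueError("frame length must be a multiple of 3 bytes")
    depth, infrared = [], []
    for i in range(0, len(frame), 3):
        # Assumption: depth is little-endian, so the low byte comes first.
        depth.append(frame[i] | (frame[i + 1] << 8))
        # The third byte of each pixel is the 8-bit infrared intensity.
        infrared.append(frame[i + 2])
    return depth, infrared


# Two made-up pixels, just to show the layout.
example = bytes([0x34, 0x12, 0x7F, 0xFF, 0xFF, 0x00])
print(split_inzi(example))
```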

Index 4 INVR is another 16 bit depth format.

The difference from INVZ is currently unknown.

Index 5 INRI is another 24 bit 16:8 format.

Presumably there are two combined formats so that either depth format can be used with infrared at the same time. The first two formats have a Z in their names and the second two have an R, which might be a pattern indicating how the four formats are grouped.

Index 6 INVI is the infrared stream by itself.

Index 7 RELI

A special infrared stream where alternating frames have the projector on, or off. Useful for distinguishing ambient lighting from projector lighting.
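One way such a stream could be used is to subtract a projector-off frame from the neighboring projector-on frame. The sketch below is a guess at that workflow, not something I have verified against the camera: it assumes 8 bit intensities, a scene that doesn’t move between the two frames, and that the brighter frame of a pair is the one with the projector on. All function names are illustrative.

```python
def order_pair(frame_a, frame_b):
    """Return (projector_on, projector_off), guessing by total brightness."""
    if sum(frame_a) >= sum(frame_b):
        return frame_a, frame_b
    return frame_b, frame_a


def split_projector_light(frame_on, frame_off):
    """Separate projector light from ambient light for one frame pair."""
    if len(frame_on) != len(frame_off):
        raise ValueError("frames must have the same number of pixels")
    # The projector-off frame is the ambient estimate as it stands.
    ambient = list(frame_off)
    # Clamp at zero so noise cannot produce negative intensities.
    projector = [max(on - off, 0) for on, off in zip(frame_on, frame_off)]
    return projector, ambient


on, off = order_pair([30, 60, 10], [100, 50, 10])
print(split_projector_light(on, off))
```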

Second Driver

Before I patched the kernel to add pixel format support I looked for other drivers that might work without modification. I didn’t find any that worked out of the box, but I did find that libuvc was close. It has the additional advantage that it is based on libusb, and thus cross platform by default. The author, Ken Tossell, helped me out by telling me what needed to be modified to add the RealSense pixel formats. It turned out that wasn’t sufficient, as libuvc didn’t have support for two cameras on the same device yet. I decided to put it off until after I got calibration working, and this was fortunate because someone else already had that part working. Steve McGuire contacted me on March 26 to let me know that my blog posts had been useful in getting the libuvc driver working with the RealSense camera. I tested it today on Linux, and can confirm that it can produce color, infrared, and depth video. The example app regularly stopped responding, so it might not be 100% ready, but it’s just a patch on an already stable driver, so anything that needs to be fixed is likely to be minor. Libuvc is a cross platform driver, so this probably means there is now open source driver support for MacOS and Windows, but I haven’t been able to test this yet.

Three Ways to Calibrate

- The Dorabot team was the first to achieve a practical calibration on Linux. Their methodology was to write an app for the Windows SDK that copied all of the mappings between color and depth to a file, and then a library for Linux to use that lookup table. They first reported success on January 27. Their software was released on March 22.

- I used a Robot Operating System (ROS) module to calibrate using a checkerboard pattern. My first success was March 23.

- Steve McGuire wrote that he used a tool called vicalib.

Amazon Picking Challenge

The Dorabot team, Steve McGuire’s team PickNik at ARPG, and one of my crowdfunding sponsors are all participants. In all cases the Intel RealSense F200 was picked because it functions at a closer range than other depth cameras. The challenge takes place at the IEEE Robotics and Automation conference in Seattle, Washington, May 26-30. Maybe I’ll have a chance to go to Seattle and meet them.

Robot Operating System (ROS)

I converted my demo program depthview to be part of the ROS build system so that I could use both the ROS camera_calibration tool and the rviz visualization tool to view generated point clouds. In the process I learned a lot about ROS, and decided it was an ideal first target platform for a Linux and MacOS SDK. The core of ROS is glue between various open source tools that are useful in robotics. Often these tools were started years ago, separately, and don’t have compatible data formats. Besides providing common formats and conversion tools, ROS provides ways to visualize data on a running system. Parts of a running ROS system can be in the same process, in separate executables, or even on different machines connected via a network. The modular approach with standard data formats is ideal for rapid prototyping. People can work together and autonomously at the same time, without concern for breaking other people’s projects. ROS is also better documented than most of the individual packages that it glues together. It has its own Q and A system that acts a lot like Stack Overflow, and it has had an answer ready for almost all of my problems. The info page for every package is a wiki, so anyone can easily improve the documentation. The biggest bonus is that many of the people doing research in computer vision are doing it for robotics projects using ROS.

Downside of ROS

It took me a long time to get the build system working right. There are many things to learn that have nothing to do with depth image processing. Integrating modules is easiest via networking, but that has a high serialization and deserialization overhead. There are options for moving data between modules without network overhead, or even without copying, but they take longer to learn. The networking has neither encryption nor authentication support. If you are using that feature across a network you will need some combination of a firewall and a VPN or IPsec.

Point Clouds

Pretty much every 3d imaging device with an open source driver is supported by ROS, and that provided a lot of example code for getting a point cloud display from the Intel RealSense 3d camera. ROS is a pubsub system. From depthview I had to publish images and camera calibration information. Processing a depth image into a point cloud typically has two steps: getting rid of camera distortion, commonly called rectifying, and projecting the points from the 2d image into 3 dimensions. Using a point cloud with rviz requires an extra step of publishing a 3d transformation between world, or map, space and the coordinate frame of the camera. There are modules for all of these things, which can be started as separate commands in any order, or all together with a launch file. The first time I started up this process I skipped the rectification step to save time, and because the infrared video has no noticeable distortion even when holding a straight edge to the screen. I imagine it’s possible that the processor on the camera is rectifying the image as part of the range finding pipeline, so that step would actually be redundant. When writing my first launch file I added the rectifying step, and it started severely changing the range of many points, leading to a visible cone shape from the origin to the correctly positioned points. My guess is that the image rectifying module can’t handle the raw integer depth values, and I need to convert them to calibrated floats first.
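The projection step can be sketched with the standard pinhole camera model. This is a simplified stand-in for what the ROS modules do, not their actual code: it skips rectification entirely, `fx`, `fy`, `cx`, `cy` stand for the focal lengths and principal point from calibration, and `depth_scale` assumes the raw integers convert to distance with a single linear factor, which is exactly the part I haven’t confirmed for this camera. Treating a raw value of 0 as "no reading" is also an assumption.

```python
def depth_to_points(depth, width, height, fx, fy, cx, cy, depth_scale):
    """Project a row-major raw depth image into a list of (x, y, z) points."""
    if len(depth) != width * height:
        raise ValueError("depth buffer does not match width * height")
    points = []
    for v in range(height):
        for u in range(width):
            raw = depth[v * width + u]
            if raw == 0:
                # Assumption: zero marks a pixel with no depth reading.
                continue
            # Convert the raw integer to a float distance first.
            z = raw * depth_scale
            # Pinhole model: scale the pixel offset from the principal
            # point by depth over focal length.
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Tiny 2x2 image with made-up intrinsics, only to show the call.
print(depth_to_points([0, 2, 4, 0], 2, 2, 2.0, 2.0, 0.5, 0.5, 0.5))
```

Doing the integer-to-float conversion before any other processing matches my guess above about why the rectifying module produced a cone of misplaced points.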

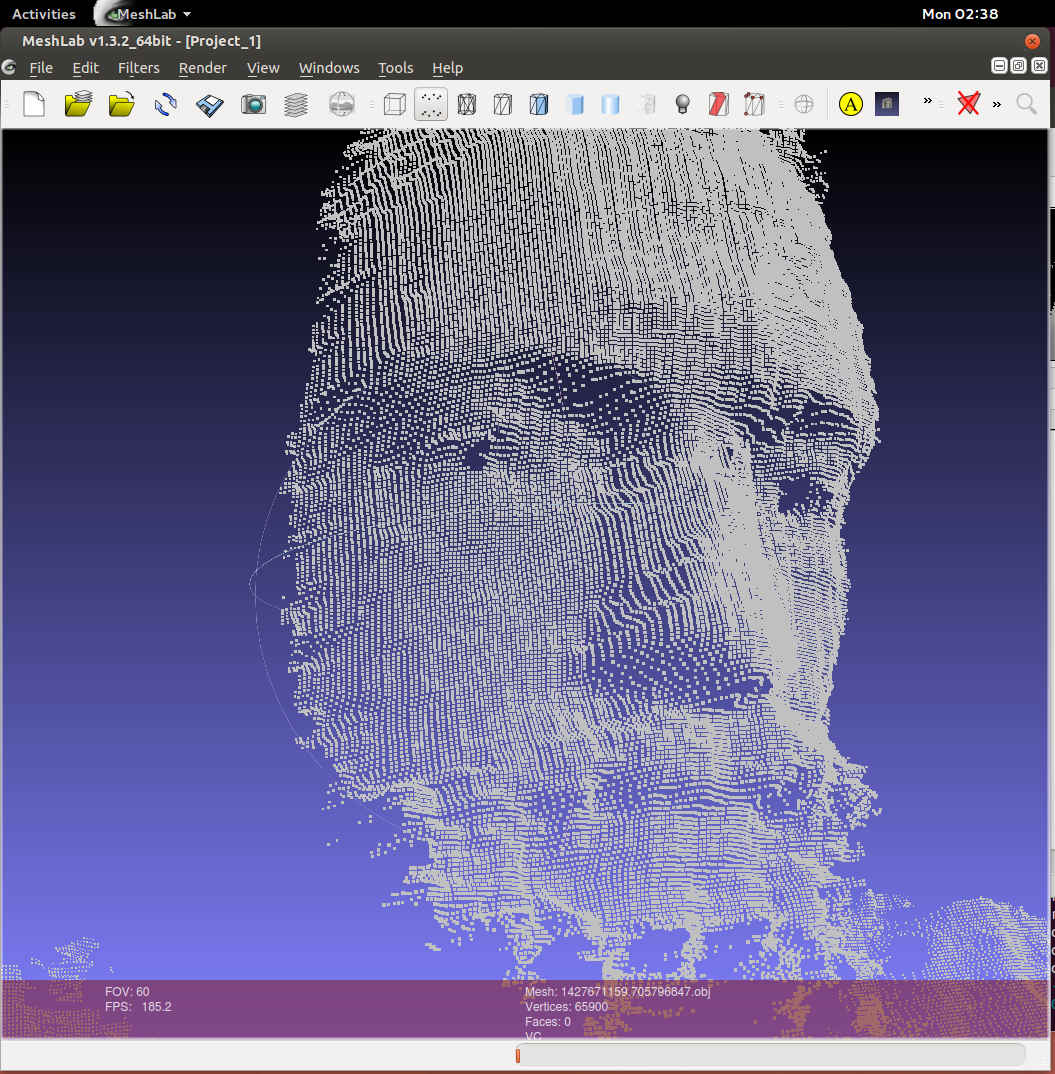

Exporting Point Clouds

ROS has a tool for recording an arbitrary list of published data into “bag” files. To get a scan of my head I started recording, and tried to get an image of my head from every angle. Then I dumped all the point clouds with “rosrun pcl_ros bag_to_pcd”. It was surprisingly difficult to find any working tool to convert from .pcd to a 3d format that any other tool accepted. An internet search turned up 5 years of forum posts from frustrated people. There happens to be an ASCII version of the .pcd file format, and it looks fairly similar to the .obj file format. I converted all the files with a bash one-liner:

for i in *.pcd ; do pcl_convert_pcd_ascii_binary "$i" "${i%.*}.ascii" 0; grep -v nan "${i%.*}.ascii" | sed -e '1,11d;s/^/v /' > "${i%.*}.obj" ; rm "${i%.*}.ascii" ; done
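For anyone who prefers a script, here is a rough Python equivalent of the sed and grep part of that one-liner. It is a sketch with a few differences that are my own choices: it looks for the DATA line instead of assuming the header is exactly 11 lines, it assumes the first three fields of every point are x, y, and z, and it drops any extra fields such as color. It expects a file that has already been converted to ASCII.

```python
import math


def pcd_ascii_to_obj(pcd_text: str) -> str:
    """Convert the text of an ASCII .pcd file to OBJ vertex lines."""
    lines = pcd_text.splitlines()
    # Find the end of the header by its DATA line.
    for i, line in enumerate(lines):
        if line.startswith("DATA"):
            if line.split()[1:] != ["ascii"]:
                raise ValueError("only ASCII .pcd data is handled")
            body = lines[i + 1:]
            break
    else:
        raise ValueError("no DATA line found")
    out = []
    for line in body:
        fields = line.split()
        if len(fields) < 3:
            continue
        # Skip points with missing coordinates, like grep -v nan does.
        if any(math.isnan(float(f)) for f in fields[:3]):
            continue
        # Assumption: the first three fields are x, y, z.
        out.append("v " + " ".join(fields[:3]))
    return "\n".join(out) + "\n"
```

To use it on a file, read the text with `open(name).read()`, pass it to `pcd_ascii_to_obj`, and write the result to a file ending in .obj.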

Converting Point Clouds to Meshes

This step is much harder. The only decent open source tool I’ve found is called MeshLab. It’s really designed to work with meshes more than point clouds, but there don’t seem to be any good user friendly tools for point clouds specifically. It displays and manipulates point clouds so fast that I suspect there is no inherent reason why rviz was getting 15 FPS at most. MeshLab was getting FPS in the hundreds with the same data. I had to go through a bunch of tutorials to do anything useful, but its workflow is okay once you get past the learning step, with one major exception. It crashes a lot, usually 5 minutes or more into processing data, with no save points. There was lots of shaking of fists in the air in frustration. Building a 3d model from point clouds requires aligning clouds from multiple depth frames precisely, and connecting the points into triangles. The solver for aligning clouds needs human help to get a rough match, and the surface finder is the part that crashes.

At the moment I don’t have a good solution, but I think ROS has the needed tools. It has multiple implementations of Simultaneous Localization and Mapping (SLAM), a technique for stitching 3d maps together. It could even use an accelerometer attached to the camera to help stitch point clouds together. PCL, the Point Cloud Library, has functions to do all the needed things with point clouds; it just needs a user friendly interface.

Planned Features

There are three primary features that I want to achieve with an open source depth camera SDK. The obvious 3d scanning part is started. Face tracking should be easy because OpenCV already does that. Gesture support is the hardest. There is an open source tool called Skeltrack that can find the joints of a human in depth images, which could be used as a starting point. The founder of Skeltrack, Joaquim Rocha, works at CERN, and has offered to help get it working with the RealSense camera.

Next Steps

One downside of prototyping at the same time as figuring out how something works is that it leads to technical debt at an above average pace. Since knowing how to do things right depends on having a working prototype, refactoring is around 50% of all coding time. The primary fix that needs to be done here is setting up conditional compilation so that features with major dependencies can be disabled at compile time. This will let me merge the main branches back together. The driver interface should be split off from Qt. It should interface to both working drivers so programmers don’t have to pick which one to support. It needs to pick the right calibration file based on camera serial number as part of multiple camera support. There needs to be a calibration tool that doesn’t require ROS, because ROS hasn’t been ported to Windows. The calibration tool I used was just using two features of OpenCV, so that should be easy.

News updates

I’ve been posting minor updates on my crowdfunding page. If you sponsor my project for any amount of money you’ll get regular updates directly to your inbox.

Business opportunities

The companies contacting me include one of the biggest companies that exist, and one of the most valued startups ever. The interest has convinced me that I’m working on something important. Most of them want me to sign an NDA before I find out what the deal is. I can’t currently afford to pay a lawyer to tell me if it’s a good deal or not. One of the companies even wants it to be a secret that they have talked to me at all. I would rather keep a secret because I want to maintain a good relationship than because of the force of law punishing me. I don’t work well with negative reinforcement. Work I have done in the past that was under NDA has made it difficult to get work because I had nothing to show for it. This project, on the other hand, is very open, and it has resulted in people calling me regularly to see if I can be a part of their cool startup. That has never happened before. The one with the craziest idea for something that has never been done before will probably win. In the meantime I’ll be sleeping on my dad’s living room couch, where the cost of living is low, unless people crowdfund me enough money to afford first month’s rent.