Intel RealSense camera on Linux

Intel has started advertising a new product that you can’t buy yet called RealSense. It is actually several products under a common brand name. One is the snapshot product, which captures images from three lenses at once. The other is the 3D camera, which uses structured light in the infrared spectrum, similar to how the original Kinect for Xbox works. I’m going to talk about the 3D camera, as that is the one I have first-hand experience with. Part of the brand is the software that goes with it on an Intel-based laptop, desktop, or mobile device. The 3D camera does a very good job of creating a 3D point cloud, but it’s not easy to turn a 3D point cloud into knowledge of what gesture someone is making with their hand. The software Intel has created for the hardware is a very significant part of the product.

Since this is a prerelease product I’m not going to take any benchmarks, as the numbers will be different by the time you can buy one. The work I have done with Linux was on an Ivy Bridge CPU, while the official minimum requirement is a Haswell processor.

Comparison with original Kinect

I haven’t worked with the Xbox One version of Kinect, so my comparison will only be with the original. A significant part of the design of the original Kinect was created by PrimeSense, a company now owned by Apple. Both the Kinect and the RealSense 3D camera use structured infrared light to create a depth image. Both have a color camera that is separate from the infrared camera, and this leads to imperfect matching of depth data to color.

The fundamental design difference is range. The Kinect is designed to get depth data for a whole room. The RealSense 3D camera is for things less than 2 meters away. The Kinect has a motor so that the computer can automatically adjust the tilt to get people’s whole bodies in frame. The RealSense camera is used at a short distance, so people can easily reach out to the camera and adjust it manually. The Kinect has 4 microphones in order to beamform and capture voice from two separate game players. The RealSense camera only needs two to capture from one person at close range. The Kinect has a relatively coarse depth resolution of around a centimeter, sufficient for skeleton tracking of a human. The RealSense camera has depth precision fine enough to detect gender, emotion, and even a pulse. The RealSense 3D camera is also much smaller, resting comfortably on the top bezel of a laptop, and doesn’t require a power brick. The original Kinect is a USB 2.0 device, which made it impossible to match the latency or resolution of the RealSense 3D camera.

Windows Experience

Intel treated everyone at the “Hacker Lab” very well. We were provided with a high performance laptop for the day, preloaded with all the tools we needed to develop apps for the 3D camera on Windows. The meals were catered and the coffee plentiful.

The C and C++ parts of the beta SDK are closer to done than other parts. The binding for the JavaScript and Unity libraries is clever in that it uses a daemon with a websocket to communicate. I think this means it should be possible to write a binding for any language, and to work in any modern browser. I opted to use the Processing framework with Java, as I’ve had good luck creating 3D demos with it quickly. I was told that the Processing binding is one of the newer parts of the SDK and not as complete. Even so, I managed to get the data I needed without too much trouble. The trickiest part for me was that Processing doesn’t natively support quaternions for rotation, and I managed to get the x, y, z, w components mixed up or backwards when converting to a rotation matrix. When I got everything right I was impressed with how perfectly my rendered object matched the position and orientation of my hand. I couldn’t notice any latency at all.
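The quaternion mix-up is easy to make because libraries disagree on component order. Here is a minimal sketch of the standard unit-quaternion to rotation-matrix conversion in Python. It is not the SDK’s API: the function names and the (w, x, y, z) argument order are assumptions chosen for illustration, and the SDK may order its components differently.

```python
import math


def quat_to_matrix(w, x, y, z):
    """Convert a quaternion (w, x, y, z) to a row-major 3x3 rotation matrix."""
    n = math.sqrt(w * w + x * x + y * y + z * z)
    if n == 0.0:
        raise ValueError("zero-length quaternion")
    # Normalise so slightly non-unit input still gives a pure rotation.
    w, x, y, z = w / n, x / n, y / n, z / n
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]


def rotate(m, v):
    """Apply a 3x3 matrix to a 3-component column vector."""
    return [sum(m[r][c] * v[c] for c in range(3)) for r in range(3)]
```

A quick sanity check is a quarter turn about the z axis, which should carry the x axis onto the y axis. If it lands somewhere else, the component order or a sign is probably swapped.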

Linux!

My laptop came with Windows 8, and I went to a lot of trouble to set up multiboot with Ubuntu, but I almost never used Windows, and it was taking up half the hard drive, so I nuked it a while ago. Now I want to make things with my new 3D camera that only supports Windows. Bummer. Oh wait, the Kinect only lasted a week before there was open source support for it. Maybe it’s not so hard to get the RealSense 3D camera working in the same way.

First up, let’s plug it in and see what gets detected. This is the tree view from lsusb -t.

    /:  Bus 04.Port 1: Dev 1, Class=root_hub, Driver=xhci_hcd/4p, 5000M
        |__ Port 2: Dev 16, If 0, Class=Hub, Driver=hub/2p, 5000M
            |__ Port 1: Dev 17, If 0, Class=Video, Driver=uvcvideo, 5000M
            |__ Port 1: Dev 17, If 1, Class=Video, Driver=uvcvideo, 5000M
            |__ Port 1: Dev 17, If 2, Class=Video, Driver=uvcvideo, 5000M
            |__ Port 1: Dev 17, If 3, Class=Video, Driver=uvcvideo, 5000M
            |__ Port 1: Dev 17, If 4, Class=Vendor Specific Class, Driver=, 5000M
    /:  Bus 03.Port 1: Dev 1, Class=root_hub, Driver=xhci_hcd/4p, 480M
        |__ Port 2: Dev 22, If 0, Class=Hub, Driver=hub/2p, 480M
            |__ Port 2: Dev 23, If 0, Class=Audio, Driver=snd-usb-audio, 12M
            |__ Port 2: Dev 23, If 1, Class=Audio, Driver=snd-usb-audio, 12M
            |__ Port 2: Dev 23, If 2, Class=Audio, Driver=snd-usb-audio, 12M
            |__ Port 2: Dev 23, If 3, Class=Human Interface Device, Driver=usbhid, 12M

Drivers bound to it without my doing anything beyond plugging it in. The microphone array showed up in my sound control panel as a working device. I already have a built-in webcam as /dev/video0. Plugging in the RealSense camera added /dev/video1 and /dev/video2.

/dev/video1 is just a normal 1080p color webcam, and any software that works with video was happy with it. /dev/video2 was more difficult. It’s the source of the depth image. The problem is that the USB Video Class (UVC) specification doesn’t define a pixel format for depth. When applications open the camera they try to pick their favorite format and find it doesn’t exist. Let’s look at the supported formats for the depth camera.

    $ v4l2-ctl --list-formats --device=/dev/video2
    ioctl: VIDIOC_ENUM_FMT
        Index       : 0
        Type        : Video Capture
        Pixel Format: 'YUYV'
        Name        : YUV 4:2:2 (YUYV)

        Index       : 1
        Type        : Video Capture
        Pixel Format: ''
        Name        : 5a564e49-2d90-4a58-920b-773f1f2

        Index       : 2
        Type        : Video Capture
        Pixel Format: ''
        Name        : 495a4e49-1a66-a242-9065-d01814a

        Index       : 3
        Type        : Video Capture
        Pixel Format: ''
        Name        : 52564e49-2d90-4a58-920b-773f1f2

        Index       : 4
        Type        : Video Capture
        Pixel Format: ''
        Name        : 49524e49-2d90-4a58-920b-773f1f2

        Index       : 5
        Type        : Video Capture
        Pixel Format: ''
        Name        : 49564e49-57db-5e49-8e3f-f479532

        Index       : 6
        Type        : Video Capture
        Pixel Format: ''
        Name        : 494c4552-1314-f943-a75a-ee6bbf0

That’s a whole lot of mystery formats. I didn’t actually notice any differences in how each format displayed. Formats can be picked by index with this command. Just replace “1” with the format you want to try.

$ v4l2-ctl --device=/dev/video2 --set-fmt-video=pixelformat=1
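The mystery names in the format listing look like truncated GUIDs. UVC format GUIDs often carry a four-character code in their first field, printed as a little-endian number, so reversing those bytes may give a readable tag. That is an assumption about how these names were generated, not something confirmed by Intel documentation, and the helper name below is made up for illustration. A minimal Python sketch:

```python
def fourcc_from_guid_name(name):
    """Decode the first GUID field as a little-endian four-character code."""
    first_field = name.split("-")[0]
    # The field is printed as a 32-bit number, so the byte order is reversed
    # relative to how the four characters sit in memory.
    raw = bytes.fromhex(first_field)[::-1]
    return raw.decode("ascii", errors="replace")


# Names copied from the v4l2-ctl --list-formats output.
NAMES = [
    "5a564e49-2d90-4a58-920b-773f1f2",
    "495a4e49-1a66-a242-9065-d01814a",
    "52564e49-2d90-4a58-920b-773f1f2",
    "49524e49-2d90-4a58-920b-773f1f2",
    "49564e49-57db-5e49-8e3f-f479532",
    "494c4552-1314-f943-a75a-ee6bbf0",
]

for index, name in enumerate(NAMES, start=1):
    print(index, fourcc_from_guid_name(name))
```

Under that assumption the six entries decode to INVZ, INZI, INVR, INRI, INVI, and RELI. What each tag actually means is not something the listing tells us.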

GStreamer’s fakesink conveniently has a hexdump output.

$ gst-launch-0.10 v4l2src device=/dev/video2 ! fakesink num-buffers=1 dump=1

Here is a snippet.

00095ef0 (0x7f00f13f9f00): 94 37 8c 37 4f 37 00 00 f7 33 c6 33 bd 33 c6 33 .7.7O7...3.3.3.3

00095f00 (0x7f00f13f9f10): cf 33 d4 33 de 33 e8 33 f2 33 13 34 17 34 0a 34 .3.3.3.3.3.4.4.4

00095f10 (0x7f00f13f9f20): f8 33 e5 33 e2 33 cd 33 c2 33 bd 33 ae 33 a5 33 .3.3.3.3.3.3.3.3

00095f20 (0x7f00f13f9f30): 91 33 73 33 5f 33 4b 33 67 33 8a 33 95 33 bd 33 .3s3_3K3g3.3.3.3

00095f30 (0x7f00f13f9f40): 07 34 2a 34 38 34 e4 33 23 33 c3 32 5e 33 8f 33 .4*484.3#3.2^3.3

00095f40 (0x7f00f13f9f50): d3 33 15 34 53 34 32 34 91 33 f0 32 97 32 60 32 .3.4S424.3.2.2`2

00095f50 (0x7f00f13f9f60): 0c 32 e2 31 e8 31 e8 31 0f 32 00 00 00 00 00 00 .2.1.1.1.2......

I guessed 16-bit little-endian values. You can also dump the raw binary of a frame to a file.

$ gst-launch-0.10 v4l2src device=/dev/video2 num-buffers=1 ! multifilesink location="frame%05d.raw"
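To test the 16-bit little-endian guess on a dumped frame, something like the following Python sketch could be used. It assumes a 640x480 frame (the size passed to videoparse in this article) and two bytes per pixel. The function names are made up for illustration, and the units of the decoded values are unknown.

```python
import struct

WIDTH, HEIGHT = 640, 480  # assumed frame size


def decode_depth(raw, width=WIDTH, height=HEIGHT):
    """Unpack raw bytes into rows of unsigned 16-bit little-endian values."""
    expected = width * height * 2
    if len(raw) != expected:
        raise ValueError("expected %d bytes, got %d" % (expected, len(raw)))
    values = struct.unpack("<%dH" % (width * height), raw)
    return [list(values[r * width:(r + 1) * width]) for r in range(height)]


def load_frame(path):
    """Read one dumped frame from disk and decode it."""
    with open(path, "rb") as f:
        return decode_depth(f.read())
```

Calling load_frame("frame00000.raw") on the file written by multifilesink should return 480 rows of 640 values if the guess is right. A size mismatch raises an error, which is a cheap way to find out that the format or resolution is something else.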

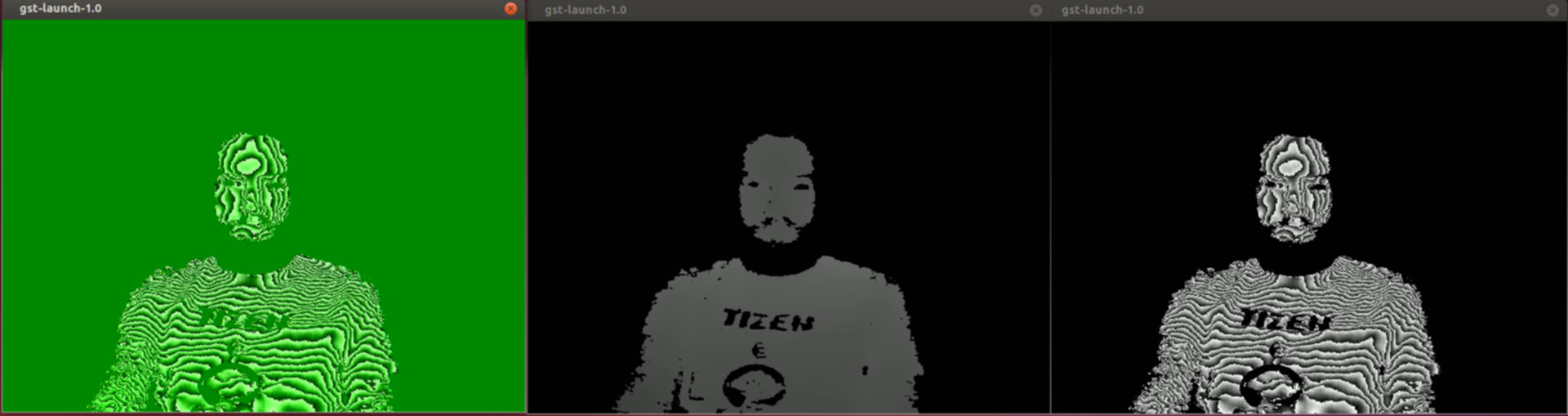

GStreamer tries to automatically determine the correct format, but in this case it needs to be told manually if you want anything other than a green topographic map appearance.

$ gst-launch-0.10 v4l2src device=/dev/video2 ! videoparse format=GST_VIDEO_FORMAT_GRAY16_LE width=640 height=480 ! autovideoconvert ! autovideosink

Using the Intel SDK from Linux

The official Intel SDK is Windows only, but thanks to modern virtual machines you can run Windows on Linux. Since the only copy of Windows I have is the system restore that came with the laptop, I didn’t have anything convenient to install. I found on the Intel forums that the RealSense SDK was reported to work with the Windows 10 preview.

I tried VirtualBox first and found it easy to install, but its USB passthrough supports USB 2.0 and not 3.0, and the RealSense camera is 3.0 only because it really needs that much bandwidth.

I think Linux KVM will probably work, but it took me more than 10 minutes to figure out how to get the Windows installer to run, so I moved on.

VMware Player worked perfectly. It even detected the ISO as being the Windows 10 preview. I have found limitations in the resolution of video streams it can handle. I think this is a limit on how fast the USB passthrough works rather than a performance issue with anything else. It is fast enough to handle the depth feed at 30 fps, which is all you need to get the SDK examples working. Your experience might be different with a newer computer. So far the only examples that didn’t work were ones where I didn’t have the appropriate tools installed, like the Unity development demos that require Unity. Pretty great for a prerelease product with a beta SDK on a beta OS in a virtual machine. My expectations have been exceeded.

Wireshark

It’s possible to look at the raw USB traffic with Wireshark. Since the camera works fine with drivers built into Linux, the primary interest would be to figure out the parts that weren’t obvious. I haven’t figured out how to get an infrared feed from Linux yet. I’m guessing that it’s activated through a vendor extension of UVC. The other mystery is whether factory calibration data is available. Capturing the traffic produces data on the order of a gigabyte a minute, so it’s a significant heap of data to dig through.
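The gigabyte-a-minute figure is roughly what the depth stream alone would account for. As a back-of-the-envelope check in Python, assuming 640x480 frames of 16-bit pixels at 30 fps and ignoring USB protocol overhead and any other streams (the function name is made up for illustration):

```python
def stream_bytes_per_minute(width, height, bytes_per_pixel, fps):
    """Raw payload size of an uncompressed video stream over one minute."""
    return width * height * bytes_per_pixel * fps * 60


depth = stream_bytes_per_minute(640, 480, 2, 30)
print("%.2f GB per minute" % (depth / 1e9))  # prints "1.11 GB per minute"
```

A capture that also includes the 1080p color stream would be considerably larger, so it helps to start only the stream you are trying to understand.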

GStreamer note

I’m using gstreamer-0.10 instead of gstreamer-1.0 because with 1.0 I have to reset the camera before each use. Physically unplugging it and doing a USB reset in software both work. The software reset is much faster. See [1] for info on how to do a USB reset through software.
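One common way to do a software reset on Linux is the USBDEVFS_RESET ioctl on the device node under /dev/bus/usb. The Python sketch below shows that approach. It is not necessarily the method described in [1], the function names are made up for illustration, and the bus and device numbers come from the lsusb output and change every time the camera is replugged.

```python
import fcntl
import os

# _IO('U', 20) from <linux/usbdevice_fs.h>; this value holds on x86 and ARM.
USBDEVFS_RESET = (ord("U") << 8) | 20


def usb_device_path(bus, dev):
    """Build the usbfs node path for a bus and device number."""
    return "/dev/bus/usb/%03d/%03d" % (bus, dev)


def reset_usb_device(bus, dev):
    """Ask the kernel to reset one USB device. Usually needs root."""
    fd = os.open(usb_device_path(bus, dev), os.O_WRONLY)
    try:
        fcntl.ioctl(fd, USBDEVFS_RESET, 0)
    finally:
        os.close(fd)
```

With the listing shown earlier, where the camera is Dev 17 on Bus 04, the call would be reset_usb_device(4, 17).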

Conclusion

Intel’s RealSense 3D camera is great technology. While I would love to see an official Intel SDK for Linux with all the impressive features the Windows SDK has, it’s not actually a requirement for using the camera included in the software development platform kit. It’s new technology, so the UVC standard doesn’t have a specification for depth formats. The standard allows for vendor extensions, so Intel was able to create a product that works with drivers that already exist. It’s only userspace applications that fail, because they don’t know how to interpret the data they get.

Next steps

I’d like to build an app that gets the data into a point cloud and has basic OpenGL visualization of the data as it’s recorded. I definitely want something usable with Point Cloud Library and MeshLab. I would like to use that as the basis of a tutorial on how to use the camera to do useful things. I would also like to write a websocket app to make it easy to get the data into other applications such as a web browser.

If you like what I’m doing you could send me money so I can keep working on it full time. Crowdfund 3D